Блог:ROSA Planet

A technical blog of ROSA Laboratory.

All content is published under Creative Commons Attribution-ShareAlike 3.0 License (CC-BY-SA)

Please, subscribe to RSS/Atom feed. If you have any questions do not hesitate to contact us

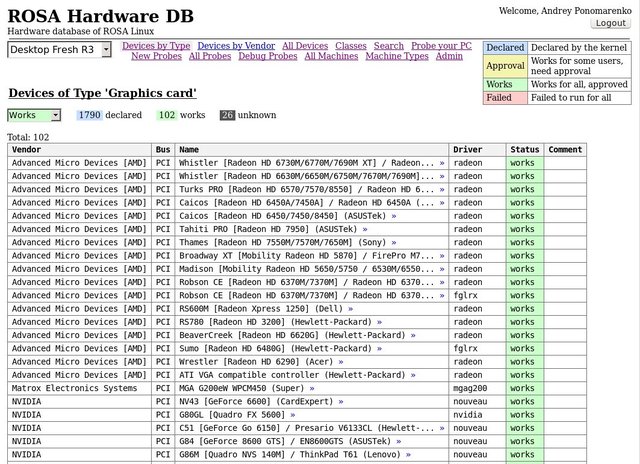

ROSA Hardware DB

The database is now available at Linux-Hardware.org.

Today the market offers a huge variety of configurations of personal computers. In the development of the ROSA operating system we make considerable efforts to support all possible configurations.

Five years ago, when developing first versions of the operating system and, until recently, we used the industry-standard method of user interaction. If the user encountered some problem, then he reported about it on our forum or bugzilla. The support team then began to ask the user to submit characteristics of the computer, system logs, etc. All these numerous data were collected in comments to the appropriate bug in bugzilla and then analyzed by the developers. The main disadvantage of this approach was that the user was required to perform too much actions, so the debugging of the problem stretched into weeks, and sometimes months.

To simplify the process of interaction with users, we have developed the HW Probe Tool. The tool is designed to collect all the necessary information on the user's computer to analyze and debug his problem. In this case, the user is required to perform only one command:

hw-probe -all -upload

One can run this command on the installed system or in the Live-mode. It is highly recommended to run this command as root in order to upload more details about all devices installed on the computer. And it's desirable to connect the maximum number of peripheral devices available to analyse them too.

As a result of this command, information about all hardware devices on the computer, the system initialization logs and other information will be uploaded to our database for subsequent analysis by developers and support team. User in this case will receive a link to the probe of his computer, that can be attached to the message on the forum, bug or shared with knowledgeable people who can help to fix the problem (the probe example for ASUS N73SV is here). As a result of this interaction mechanism, problems on users' computers are now analyzed and solved much faster.

The hw-probe package is already included in ROSA Desktop Fresh R4. Please update the package before using it in order to upload most complete test results to the database. Users of other versions of the ROSA operating system must install the package from this directory.

The database is available at hw.rosalinux.ru/.

On the basis of all the collected probes, as well as static analysis of the kernel drivers, we automatically create the database of supported hardware. You can find the DB on the website hw.rosalinux.ru/. You can, for example, look at the list of all tested graphics cards or the list of all WiFi-cards, support for which is declared in the kernel. You can also view the list and the classes of all tested PC models. For the classification of devices we use kernel classification of appropriate device drivers. For PCI and USB devices additionally we use thin classification by the class id of the device.

In this note, we invite all users of the ROSA operating system to upload probes of their computers in order to participate in creation of the ROSA Hardware DB. If some device does not work, please also post a message about it to our forum or bugzilla.

ROSA Desktop Fresh R4 Is Out!

The ROSA company is happy to present the long waited ROSA Desktop Fresh R4, the number 4 in the "R" lineup of the free ROSA distros with the KDE desktop as a main graphical environment. The distro presents a vast collection of games and emulators and the Steam platform package along with standard suite of audio and video communications software including the newest version of Skype. All modern video formats are supported. The distribution includes the fresh LibreOffice v4.3.1, the full TeX suite for true nerds as well along with best Linux desktop publishing, text editing and polygraphy WYSISYG software. The LAMP/C++/… enviroment packages are waiting to be installed by true hackers.

The R4 distribution is recommended for both the home users who want to communicate and surf the web and for the experienced ones who'd like to tweak the system their own way. ROSA Fresh R4 is based on a new rosa2014.1 platform which will be supported for 2 years and will serve as a base for several future releases.

Unlike R1, R2 and R3 releases, this release uses different repositories based on a new rosa2014.1 platform. All users of previous ROSA Desktop Fresh releases are highly encouraged to migrate to R4 release, since old releases are no longer supported. We recommend to install R4 over existing system from ISO image (if you have a separate /home partition, you can reuse it to save all your data), but it is also possible to update existing systems without re-installation.

Automated Metadata Updates in Urpmi

Maintainers who constantly deal with development branch of ROSA often meet a problem with outdated metadata when trying to install a package using urpmi - it often happens that a newer version of the pakcage has been already built and published, but the local metadata still contains only information about old version of the package which is now missing. If this is the case, one have to launch urpmi.update and try to install necessary package again. To be sure, one can simply launch urpmi --auto-update, but execution of this command can take significant time and usualyl maintainers don't want to wait for it.

A good news is that in rosa2014.1 development branch (which should become ROSA Desktop Fresh R4 soon) we have improved urpmi to automatiaclly update metadata and relaunch itself in case when something goes wrong with package download and it is likely that metadata update will help. This behavior is the default one in rosa2014.1, though one can disable it by means of «--no-restart» option. Note that to avoid looping, in any case the metadata will be updated only once - if this doesn't help, then urpmi will fail as before. In addition, you can specify a timeout for urpmi to wait until trying to update metadata. This option will be useful for ABF, since sometimes package builds fail due to the reason that old version of some package has been already dropped from repositories, while metadata for the new version hasn't been generated yet. When ABF will adopt a new urpmi, the number of such failures should decrease.

The new urpmi feature is primarily useful for maintainers. Most of ordinary users rarely meet such kind of problem, at least while they have automated updates enabled - the thing is that in order to perform automatic updates, urpmi.update is launch automatically on a regular basis, and updates in repositories of the released systems doesn't happen as often as in development branches. Though users are very different, so it is likely that some of them will notice that the number of routine actions necessary to update a package has decreased a little.

So, enjoy and be ready for the next ROSA release!

Spec-gen - Automated Spec File Generator for GNU Autotools and CMake-based Programs

Similar to many other distributions, ROSA pays a lot of attention to automation of different maintainers tasks and tries low the «entry barrier» for newbies. One of the problems faced by almost every newbie who wants to build some application for ROSA is creation of spec file to build an RPM package for our distribution. In many cases spec file can be generated automatically — for example, we have corresponding tools for modules of Python, Perl, Ruby, etc.

However, many programs for Linux are developed using comiled languages such as C/C++ and are built using GNU Autotools, CMake and analogues. Up to now, for such programs we only provided spec file templates and recommendations in Packaging Policies. This is normally enough for experienced maintainers, but newbies often experience difficulties with the very first step of spec file development. That’s why we have developed a new tool named spec-gen which is able to automatically generate spec file template on the basis of analysis of tarball with application source code.

The tool is very easy to use — just install spec-gen package in your ROSA installation and launch spec-gen with tarball name as an argument:

$ spec-gen my_tarball-1.0.tar.gz

By mean of -g, -s, -l and -u options you can specify group, summary, license and URL of the package to be created. You can omit these options and populate corresponding fields with data after the spec file is created. The tool try to guess package name and version on the basis of archive name — the guess is that most archives with source code are named in the «<program_name>-<version>» fashion. The tool supports all popular archive formats, but if you need to add support for more formats — feel free to send us a request.

During its work, the tool will look through archive content and check if it contains configure.in, configure.ac, CMakeLists.txt, *.cmake or setup.py files. If the latter file is found then the tool will simply launch python setup.py bdist_rpm5 command and provide your with a spec file created by it. In case when GNU Autotools or CMake files are detected, spec-gen will analyze them and detect which ROSA packages should be installed to build your application — that is, the tool will try to automatically create a list of Buildrequires for your new package.

We recommend to launch spec-gen in the system for which you want to build a package, since it uses information from system repositories about package provides and files by means of urpmq and urpmf programs.

As a result, spec-gen will provide your with a spec file with filled header, list of BuildRequires, %prep, %build and %install sections. Adjustment of the list of files in the %files section, splitting the package to subpackages and other complex tricks should be performed manually. To be sure, one can’t guarantee that generated BuildRequires list is completely correct — it is possible that some dependencies are not mentioned explicitly in build scripts or spec-gen makes a wrong choice among several alternatives. Nevertheless, in most cases the tool works correctly and provides you with a spec file that ca be used to build a package. It is likely that hte only task left to you is adjustment of the %files section.

It is possible that in future we will implement even more automation — for example, we can try to automatically build a package using generated spec file and then automatically adjust the %files section on the basis of build results. Actually there are a lot of possible improvements in this area, and everybody is welcome to provide su with feedback, share ideas and send patches. The source code is available here — https://abf.io/soft/spec-gen-dev.

Finally we are glad to note that the basis of the spec-gen tool was developed by Andrey Soloviev, a student of Higher School of Economics, during his practice in ROSA Company.

Boot and Install ROSA from your own HDD

There are many ways to install ROSA — for example, you can use ISO image located at your hard drive. However, a trick with ISO described in our wiki requires some full-functional operating system to be installed in your machine to perform some preliminary actions. However it can happen that your system is broken and neither you can boot from external device. Our colleague Sergey Sokolov recently met such a problem, and below he describes a solution that helped him to bring his system back to life.

Since I am one of ROSA developers, it is no wonder that I have installed a development release of ROSA Fresh R4 to my notebook (at the time when we even didn’t have an Alpha release). Once a day I decided to update my system to the current state of repositories. Unfortunately, it turned out that exactly at that moment a lot of system stuff updates (systemd, glibc, etc.) were ongoing. And I was so unlucky that my system refused to boot after update.

It would be nice to launch Live CD or just reinstall the system, but my machine didn’t have CD/DVD recorder, I didn’t have a boot USB flash and there was nobody near me to prepare such a boot device for me! However, I remembered that I had an ISO image of ROSA on my HDD.

I took a look at my partition table:

# fdisk -l /dev/sda Disk /dev/sda: 480.1 GB, 480103981056 bytes, 937703088 sectors Units = sectors of 1 * 512 = 512 bytes Sector size (logical/physical): 512 bytes / 512 bytes I/O size (minimum/optimal): 512 bytes / 512 bytes Disk identifier: 0x00000000 Device Boot Start End Blocks Id System /dev/sda1 2048 33556479 16777216 82 Linux swap / Solaris /dev/sda2 33556480 96471039 31457280 83 Linux /dev/sda3 96471040 937703087 420616024 5 Extended /dev/sda5 96473088 937703087 420615000 83 Linux

Here /dev/sda1 was a swap which I didn’t use anymore, /dev/sda2 was my root and /dev/sda5 was a /home.

The system failed to boot normally, but I still had initrd loaded and dracut console. And that turned out to be enough to do the following actions:

mkdir /mnt mount /dev/sad2 /mnt mount -o bind /dev /mnt/dev mount -o bind /dev/pts /mnt/dev/pts mount -o bind /proc /mnt/proc mount -o bind /sys /mnt/sys mount /dev/sda5 /mnt/home chroot /mnt dd if=/home/path/to/ROSA.FRESH.KDE.R3.x86_64.iso of=/dev/sda1 bs=8M touch /boot/resque.iso vi /boot/grub2/grub.cfg

In grub.cfg, I found rescue.iso item and edited it as follows:

### BEGIN /etc/grub.d/43_resque ###

if [ -f /boot/resque.iso -o -f /boot/sgb.iso ]; then

submenu 'Repair tools' {

if [ -f /boot/resque.iso ]; then

menuentry "Boot rescue CD" {

linux (hd0,1)/isolinux/vmlinuz0 boot=live iso_filename=/dev/sda1 root=live:/dev/sda1 rootfstype=auto ro rd.live.image rhgb splash=silent logo.nologo rd.luks=0 rd.md=0 rd.dm=0

initrd (hd0,1)/isolinux/initrd0.img

}

fi

Finally, I synced filesystem and rebooted. And now I had some kind of recovery partition which could be used to install a system or launch it in Live mode. Just not forget that we should not format sda1 partition when installing a system!

Rosabootstrap - setup a ROSA chroot in any Linux

It’s not a secret that some developers of ROSA and OpenMandriva work on our distributions every time they have a small chance to do this. And it is not surprising that sometimes they just don’t have a ROSA/OpenMandriva system under the hand when they have a half an hour to spend it to development. Downloading ISO image and setting it up is not a good solution, since it requires some time and machine resources.

However, now they can setup a minimalistic chroot with ROSA or OpenMandriva in any Linux distribution which provides Shell and Python (even rpm is not necessary!). Thanks to Robert Xu, now we have omvbootstrap and rosabootstrap tools that allow doing this easily.

In case of ROSA, just clone the rosabootstrap project:

$ git clone https://abf.io/soft/rosabootstrap $ cd rosabootstrap

or download and unpack the tarball:

$ wget https://abf.io/soft/rosabootstrap/archive/rosabootstrap-master.tar.gz $ tar xzvf rosabootstrap-master.tar.gz $ cd rosabootstrap-master

And launch the script with necessary parameters:

$ sudo ./rosabootstrap -d -a x86_64 -v 2012.1 -c 2012.1 -m http://mirror.yandex.ru/rosa/rosa2012.1/repository/x86_64/main/release

That’s all — now you should have 2012.1 folder with chroot, so just go to it and enjoy the coding:

$ sudo chroot 2012.1

Usage of our developer tools in upstream

During the development of operating systems, our developers and maintainers use a huge variety of program tools (in order to build packages, analyze code quality, verify changes in code, etc.). Most of the tools are available in almost any repository, such as, for example, rpmbuild, gcc, rpmlint, check, valgrind, diff, etc. But sometimes there are tasks for which tools have not yet been created. In such cases, we create our own tools for developers. If these tools can be helpful not only for us but also for the community, then we publish their source code.

An example of one of the non-standard tasks was to analyze the backward API/ABI compatibility of system libraries in our operating system ROSA. Because the number of libraries in the system reaches several thousands, the manual tracking of changes was too difficult task. Therefore, we have developed the ABICC tool, that can automatically analyze the compatibility of changes in libraries. The source code of this tool have been published and gradually more and more upstream developers use this tool to control API/ABI compatibility of interfaces in their libraries. As a result, our maintainers can easier update appropriate packages for such libraries.

Examples of libraries successfully using our tools are: Pacemaker, MySQL++, Wireshark, Glibc, Enlightenment, libDAP++, libapt, Barry, PySide, PLplot, etc. Also, quite a number of library developers prefer to use our special service Upstream Tracker, where they can be free add any library and monitor changes in its API/ABI interface. Examples of such libraries are ImageMagick, V8, etc.

Our most popular open-source tools for developers can be found here. Among them are the following tools:

- ABICC

- a tool to check backward API/ABI compatibility of system libraries.

- PkgDiff

- a tool to classify files and visualize changes in source packages.

- Java ACC

- analogue of ABICC for Java libraries.

- API Sanity Checker

- an automatic generator of automatic unit tests for C/C++ libraries.

- ABI Dumper

- extract ABI structure from the debug-info of a shared library.

- Vtable Dumper

- list content of virtual tables in a shared library.

We encourage upstream developers to use our tools. This can improve the quality and stability of their API/ABI interfaces. And moreover this simplifies updating of appropriate packages in operating systems.

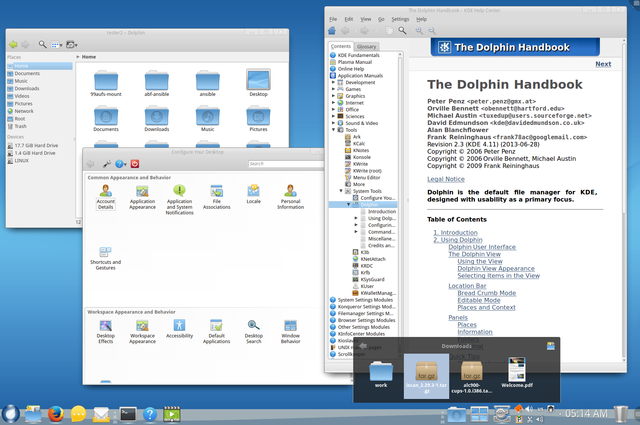

ROSA Desktop Fresh R3 KDE

Good news, everyone!

ROSA Desktop Fresh R3 release is out with KDE desktop environment.

ROSA Desktop Fresh R3 is a regular update of the ROSA Fresh platform. The R series of distributions is targeted to those users who looks for fresh and full-functional software. This series is developed by ROSA with significant help of community. The release includes all updates, fixes and improvements implemented since the time the previous R2 version was released.

Users who already work in ROSA Desktop Fresh R2 are able to update their systems to ROSA Desktop Fresh R3 from official repositories.

Newbies can easily download and try it, without reading the notes below.

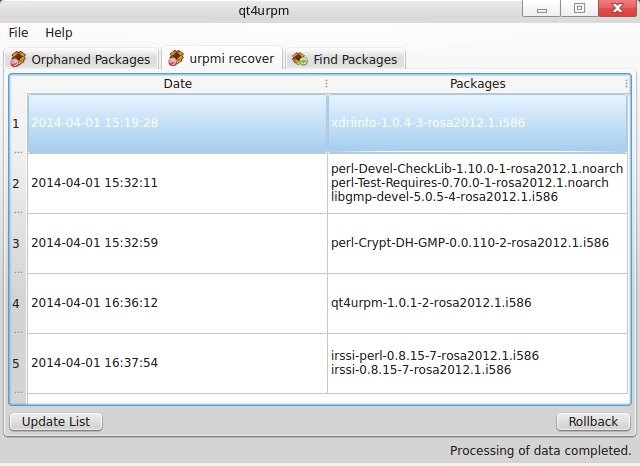

GUI for urpmi.recover

Last week we introduced urpmi.recover - a tool able to rollback the state of your system's package base. The new tool is already used actively and we have got a lot of useful feedback and fixed some issues.

One of the main concerns with urpmi.recover is that it is not very convenient to select a date or number of transactions to be reverted using command-line interface. To solve this problem, we quickly implemented a simple GUI for urpmi.recover; to be more precise, we have extended qt4urpm to support the new tool.

Qt4urpm is a small program developed several years ago by members of MandrivaUser.de community to perform two operations with urpmi repositories - file search and orphan detection and removal. To be sure, such a functionality is partially provided by Rpmdrake, but qt4urpm is much more lightweight (it is written in Qt) and faster. Despite its simple interface, it is sometimes is even more functional than Rpmdrake - for example, by means of qt4urpm you can remove only selected orphan packages. And when working with qt4urpm, you will rarely need to perform more than three mouse clicks.

So we have decided that a simple and straighforward GUI for urpmi.recover will perfectly fit in qt4urpm, and it didn't take much time to implement this. Install the freshest version (2.0) of qt4urpm, launch the program and select the urpmi.recover tab. Here you will see a list of transactions sorted by date. For every transaction, a set of packages is displayed which were installed during it:

Now select any transaction and press Rollback - urpmi.recover will revert all transactions starting from the selected one. That's all, folks:)

Maybe in future our GUI experts will implement something more beautiful, but from functionality point of view, qt4urpm already provides all the information you need to play with you package base state. We hope that it will help you to avoid getting lost in rpm transactions and to feel more comfortable when rolling back your system to some date in the past.

Spec-cleaner and rediff patch - New Helper Scripts for Maintainers

Maintainers' work includes a lot of routine tasks — adoption of spec files to match evolving packaging policies and build tools, adoption of existing patches for new upstream versions, update of package file lists and so on. Some of such tasks are performed automatically by build tools — rpmbuild itself and auxilliary scripts from spec-helper package. However, these scripts by design can’t solve a lot of problems — in particular those that require modification of spec files.

To simplify maintainers' life a little more, we have included two new scripts to the spec-helper package. The scritps' names are self-explaining — spec-cleaner and rediff_patch. These scripts are not launched automatically during the build; they are intended to be used by maintainers manually. Update spec-helper package in your system and you will get these scripts in your /usr/bin.

Spec-cleaner performs the following actions:

- removes obsolete declarations that makes no sense nowadays — for example, definitions of BuildRoot and Packager, requirement on install-info, buildroot cleanup and so on;

- modifies formatting of macros and variables — according to our policies, variables in spec files should be emraced with braces — %{const}, while for macros braces should not be used — %{macro}. Note that spec-cleaner only reformats macros and variables known to it. If you have some entities defined by your own, the script will leave them «as is»;

- formats Summary — capitalize the first letter and removes the dot from its end;

- removes explicit declarations of %{name}, %{version} and %{release} variables;

- replace obsolete macros with modern analogues;

- … and performs a lot of other small tasks which will help to make spec files smaller and cleaner and avoid claiming from rpmlint.

When developing spec-cleaner, we try to avoid situations when the new spec-file becomes incorrect in some sense. Due to this reason, some spec-file issues that may seem to be easy to fix are not automatically fixed yet.

Meanwhile, old developers may notice that spec-cleaner is a modern and much powerful analogue of old macroszification script which was present in spec-helper for years. Now this script is dropped — use new spec-cleaner instead.

As for Rediff_patch, then you have likely guessed that it tries to rediff an existing patch against a new tarball with source code. Please read the instructions below carefully before trying to use this script:

- rediff_patch should be launched in the folder of a cloned Git project, where spec file and patches reside. Spec file is used to determine how the patch should be applied;

- the new tarball should be placed in the same folder;

- the script should be launched in the following way:

rediff_patch <patch_ro_rediff> <tarball>

If you folder contains only one tarball, then you can omit second argument.

During its work, rediff_patch creates rediff_patch folder, unpacks tarball into it and tries to apply the patch to the unpacked content. To apply the patch, it uses parameters extracted from the spec file, but adds «--force» option and uses default system value for the «fuzz» option (remember that rpmbuild uses «--fuzz=0» by default). Currently the script only handles situation when tarball has a single top-lever folder; it will reject to work with tar bombs

If everything goes fine, then you will find a new patch (with the «.new» suffix) near the old one. rediff_patch folder with the whole content will be removed.

If something goes wrong (e.g., patch was applied only partially and some hunks failed) then you will be left with rediff_patch folder containing two subfolders — the original one and the new one, where a patch was tried to be applied So you will be able to analyze the reasons of failures and finish rediff manually.

As our practice shows, in reality most patches fail to rediff completely automatically, even with «--force» and more soft «--fuzz». But even rediff_patch managed to prepare a new patch for you, do not forget to check this patch carefully — '--force' sometimes leads to undesired results. But even if rediff_patch failed — well, at least we have saved some time, since it has unpacked tarball for us and performed a first attempt to apply the patch.

We hope that these two new scripts will make your life a little easier. Both scripts are easy to use and straightforward. As usual, comments, suggestions and patches are welcome.

Urpmi.recover - "Back In Time" For The Package Base

Many developers and advanced users often meet a need to rollback recently installed packages that introduced some undesirable updates to their system. this usually happens when you install packages from third party or testing repositories or from private repositories and containers where maintainers perform test builds of their packages. The latter case is quite common for ROSA QA team whose members often meet a need to rollback a set of packages installed for testing purposes.

Manual rollback is not very convenient, especially if the number of packages is huge and you are not completely sure which of them should be erased or downgraded to return system to a full-functional state. To be sure, in many cases all problems can be solved by urpm-reposync, but sometimes this tool is too powerful — it performs complete synchronization of your system and enabled repositories and it’s not easy to rollback only several packages.

A good news that now a niche between manual rollback and reposync usage is filled by urpmi.recover tool which is able to rollback your package updates. Urpmi.recover will help you to rollback your package base to certain date in the past or just rollback a given number of transactions.

Urpmi.recover is included in urpmi package and will be installed to your system with regular updates.

To be able to perform such a rollback, urpmi.recover stores old versions of updated packages in /var/spool/repackage folder. To start use the tool, you should first initialize the backup of old package versions by typing

# urpmi.recover --checkpoint

By typing this command, you say: «Currently my system is in stable state, but I am going to install dangerous updates. Please, starting from this moment, track all newly installed packages and backup the old versions in case of updates».

You can also run this command at any moment in future to rebase the stable system state. Every invocation of urpmi.recover --checkpoint will clean the /var/spool/repackage folder, so you won’t be able to rollback to earlier dates.

While package updates tracking is enabled, old versions of updated packages are stored in /var/spool/repackage subfolders corresponding to update date, so you can always rewise these old versions by yourself.

At some moment when you decide that it is time to revert your packages (at least to try to do it:)), simply say something like:

# urpmi.recover --rollback <timestamp>

You can specify timestamp in «seconds since the Unix Epoch», but feel free to use human-readable formats, e.g.:

# urpmi.recover --rollback "2014-03-07 13:20:47"

or even

# urpmi.recover --rollback "1 hour ago"

You can also rollback a given number of transactions by specifying --transactions option and passing number of transactions to be reverted to --rollback option:

# urpmi.recover --transactions --rollback <number_of_transactions>

For example, if you just updated a package a want to rollback this update, you can tell urpmi.recover to revert a single transaction:

# urpmi.recover --transactions --rollback 1

Finally, to completely disable repackaging and to clean /var/spool/repackage folder, just type:

# urpmi.recover --disable

This command will also clean up the /var/spool/repackage folder.

So these are the ways you can use urpmi.recover to rollback your package base. The tool is in experimental stage; we don’t guarantee absence of aerrors, so use it on your risk. though it should be said that before performing the rollback, urpmi.recover will provide you with details — which packages it is going to drop and which ones to downgrade, and you will be asked for confirmation. Finally, you can always use urpm-reposync in case of emergency.

One should also remember that maintainers usually don’t care about possibility to correctly downgrade their packages. So if new version of a package introduced some problems to your system, it is not necessary that rollback to a previous version will help to bring your system to a functional state.

Multiboot flash drive with several ROSA editions

Want to have a single flash drive able to boot several editions of ROSA? Sergey Zhemoitel provides a set of instructions on creating a multi-boot flash drive with several ROSA editions using grub4dos.

As an example, we will setup a flash able to boot 32bit and 64bit images of ROSA Desktop Fresh KDE. We assume that /dev/sdX is a device corresponding to your flash drive.

- Install grldr.mbr to flash MBR:

dd_rescue grldr.mbr /dev/sdX

- Create two partitions using fdisk or diskdrake:

- /dev/sdX1 - 200 Mb

- /dev/sdX2 - the rest of the device

- Format partitions:

- /dev/sdX1 - ext2 (grub4dos)

- /dev/sdX2 - ext4

- Copy grldr and menu.lst to /dev/sdX1

- In /dev/sdX2, create two folders for our ISO images:

mkdir -p rosa/kde/x86_64 rosa/kde/i586

- Unpack ISO images to corresponding folders

- Edit menu.lst and reboot.

menu.lst should look like the following:

default /default title ***** ROSA Linux KDE R2 x86_64 ****** root title ROSA install find --set-root --ignore-floppies /rosa/kde/x86_64/isolinux/isolinux.bin kernel /rosa/kde/x86_64/isolinux/vmlinuz0 root=live:UUID=40af22cf-3bab-48f4-841b-9d4fffdd87df rootfstype=auto ro rd.live.image live_dir=/rosa/kde/x86_64/LiveOS rhgb splash=silent logo.nologo install vga=788 initrd /rosa/kde/x86_64/isolinux/initrd0.img title ROSA Live find --set-root --ignore-floppies /rosa/kde/x86_64/isolinux/isolinux.bin kernel /rosa/kde/x86_64/isolinux/vmlinuz0 root=live:UUID=40af22cf-3bab-48f4-841b-9d4fffdd87df rootfstype=auto ro rd.live.image live_dir=/rosa/kde/x86_64/LiveOS vga=788 desktop nopat rd.luks=0 rd.lvm=0 rd.md=0 rd.dm=0 noiswmd splash=silent quiet logo.nologo initrd /rosa/kde/x86_64/isolinux/initrd0.img title Verify and Boot ROSA.Desktop.Fresh.R2.2012.x86_64 find --set-root --ignore-floppies /rosa/kde/x86_64/isolinux/isolinux.bin kernel /rosa/kde/x86_64/isolinux/vmlinuz0 root=live:UUID=40af22cf-3bab-48f4-841b-9d4fffdd87df rootfstype=auto ro rd.live.image live_dir=/rosa/kde/x86_64/LiveOS rhgb vga=788 splash=silent logo.nologo rd.live.check initrd /rosa/kde/x86_64/isolinux/initrd0.img title Install ROSA Desktop.Fresh R2 2012 in basic graphics mode. find --set-root --ignore-floppies /rosa/kde/x86_64/isolinux/isolinux.bin kernel /rosa/kde/x86_64/isolinux/vmlinuz0 root=live:UUID=40af22cf-3bab-48f4-841b-9d4fffdd87df rootfstype=auto ro rd.live.image live_dir=/rosa/kde/x86_64/LiveOS rhgb vga=788 splash=silent logo.nologo install xdriver=vesa nokmsboot install initrd /rosa/kde/x86_64/isolinux/initrd0.img title Rescue ROSA Fresh R2 2012 x86_64 find --set-root --ignore-floppies /rosa/kde/x86_64/isolinux/isolinux.bin kernel /rosa/kde/x86_64/isolinux/memdisk initrd /rosa/kde/x86_64/isolinux/sgb.iso title ***** ROSA Linux KDE R2 i586 ***** root title ROSA install find --set-root --ignore-floppies /rosa/kde/i586/isolinux/isolinux.bin kernel /rosa/kde/i586/isolinux/vmlinuz0 root=live:UUID=40af22cf-3bab-48f4-841b-9d4fffdd87df rootfstype=auto ro rd.live.image live_dir=/rosa/kde/i586/LiveOS rhgb splash=silent logo.nologo install vga=788 initrd /rosa/kde/i586/isolinux/initrd0.img title ROSA Live find --set-root --ignore-floppies /rosa/kde/i586/isolinux/isolinux.bin kernel /rosa/kde/i586/isolinux/vmlinuz0 root=live:UUID=40af22cf-3bab-48f4-841b-9d4fffdd87df rootfstype=auto ro rd.live.image live_dir=/rosa/kde/i586/LiveOS vga=788 desktop nopat rd.luks=0 rd.lvm=0 rd.md=0 rd.dm=0 noiswmd splash=silent quiet logo.nologo initrd /rosa/kde/i586/isolinux/initrd0.img

Note: the following parameters are obligatory:

- root=live:UUID=40af22cf-3bab-48f4-841b-9d4fffdd87df

- live_dir=/rosa/kde/i586/LiveOS

the former specifies a partition where the unpacked image resides, the letter points to a folder with squashfs.img file.

UUID can be obtained using blkid command:

# blkid <...> /dev/sdc1: LABEL="grub4dos" UUID="74e94dfa-6b1d-48ec-96bd-d96c66e55400" TYPE="ext2" /dev/sdc5: LABEL="flash" UUID="40af22cf-3bab-48f4-841b-9d4fffdd87df" TYPE="ext4" /dev/sdc6: UUID="2013-11-29-20-39-56-00" LABEL="ROSA.FRESH.KDE.R2.i586" TYPE="iso9660" PTTYPE="dos" /dev/sdc7: UUID="2013-11-29-17-24-42-00" LABEL="ROSA.FRESH.KDE.R2.x86_64" TYPE="iso9660" PTTYPE="dos"

Here we can see UUID of our partition with ISO images - "40af22cf-3bab-48f4-841b-9d4fffdd87df".

Mib-report - another tool to help maintainers

For tha last several months, we have actively used Updates Builder to update packages in our repositories. The tool works quite well and the number of packages updated by means of it during a month sometimes exceeds several hundreds. But the real life showed that one of the major weaknesses of such automated updates is not the tools themselves, but the data provided to them. The thing is that Updates Builder builds new packages on the basis of data provided by Upstream Tracker. The latter monitors upstream sites using URLs provided in spec files of RPM packages. However, these URLs are sometimes absent and even if they are present, they can be out of date (no wonder, since this information is rarely interesting for users, neither it is used in ABF, so maintainers often forget to update it). As a result, the number of "Available in Upstream" empty cells at ROSA Updates Tracker page is rather big.

But besides upstream monitoring, one can take a look at repositories of other distributions. And in this area a tool named mib-report can help. The tool was originally developed by our friends from Mandriva International Backports group. Its aim is to compare versions of packages in development repositories of ten Linux distributions:

- Rosa Desktop Fresh

- OpenMandriva Cooker

- Mageia Cauldron

- PCLinuxOS

- OpenSUSE Factory

- Alt Linux Sisyphus

- Fedora Rawhide (with RpmFusion)

- Gentoo

- Debian

- Ubuntu

MIB-report not only compares package versions, but for RPM-based distributions provides URLs to Source RPM packages:

$ mib-report --search firefox Searching for package firefox... Rosa: 25.0 http://abf-downloads.rosalinux.ru/rosa2012.1/repository/SRPMS/main/updates/firefox-25.0-1.src.rpm Cooker: 25.0.1 http://abf-downloads.rosalinux.ru/cooker/repository/SRPMS/main/release/firefox-25.0.1-1.src.rpm Mageia: 24.1.0 http://distrib-coffee.ipsl.jussieu.fr/pub/linux/Mageia/distrib/cauldron/SRPMS/core/release/firefox-24.1.0-1.mga4.src.rpm Fedora: 25.0 http://mirror.yandex.ru/fedora/linux/development/rawhide/source/SRPMS/f/firefox-25.0-3.fc21.src.rpm PCLinuxOS: 25.0 http://distrib-coffee.ipsl.jussieu.fr/pub/linux/pclinuxos/pclinuxos/srpms/SRPMS.pclos/firefox-25.0-1pclos2013.src.rpm Sisyphus: 25.0 http://mirror.yandex.ru/altlinux/Sisyphus/files/SRPMS/firefox-25.0-alt1.src.rpm Gentoo: 25.0 http://packages.gentoo.org/package/firefox Ubuntu: 25.0 http://packages.ubuntu.com/firefox Homepage URL: http://www.mozilla.com/firefox/

And inside SRPM package one can find a tarball with source code! So even if we can't automatically detect the freshest software version in upstream, we can take a look at versions available in other distributions. And if for some package their version is newer than ours, then we can take SRPM package and extract tarball with source code from it (in addition, it can make sense to updated URL field in our package).

Such a feature is currently been implemented in Updates Builder launchers - now they will take into account not only data from Upstream Tracker, but also information from mib-report. We would like to note that we only extract source tarballs from SRPMs taken from other distributions, but not the patches (since it is hard to understand without human help if a patch is relevant for ROSA or not) and not spec files (since we believe that our spec files are one of the simplest and clearest among RPM-based systems, and there is no need to overload them with tons of unnecessary constructions used in many other distributions).

In general, if to update a package we should just rebuild it using new upstream tarball, then why not to give this task to automated scripts? For human beings, we can find more interesting tasks that will be more useful for upstream developers and distribution users. Finally, we believe that upstream developers are more interested in patches and improvements from distribution maintainers, and not in contests devoted to the speed of detection of new upstream releases.

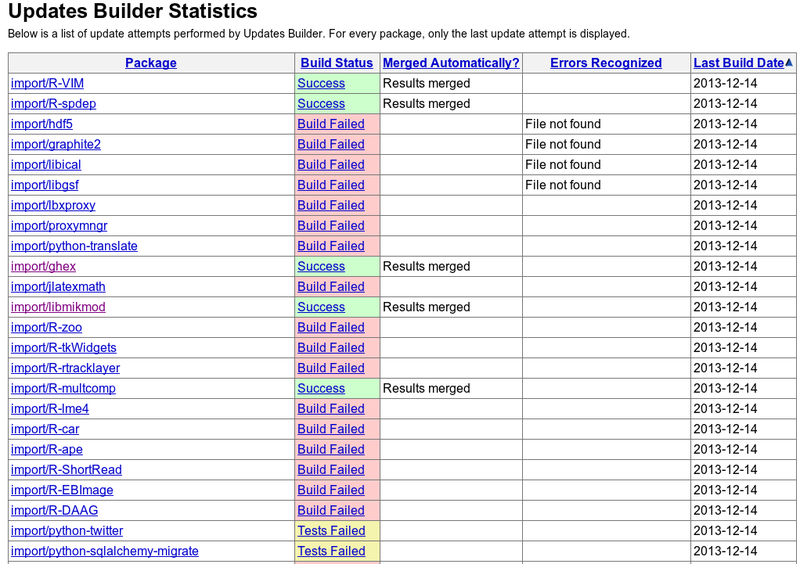

Updates Builder - Visualization of Update Results

As many of ROSA Planet readers likely know, some packages in ROSA and OpenMandriva repositories are updated to new upstream versions automatically by means of Updates Builder (to be more precise, this tool detects new upstream releases and tries to build them in ABF).

List of tracked packages can be found in wiki - here is the one for ROSA and here is the one for OpenMandriva. These lists are quite large, but in reality for some packages upstream releases happen rarely, for others Updates Builder fails to detect new releases... So what is the real ammount of work performed by Updates Builder in ABF?

Maintainers and other ABF users can estimate this ammount on the basis of general build statistics and task monitoring, but there is an easier way. The thing is that the scripts that launch Updates Builder create reports concerning results of their work and publish them at upstream-tracker.org. A page with results for ROSA is located here, the one for OpenMandriva is here.

Column names are in general self-explaining, but I'd like to give several comments for some of them.

The Merged Automatically? column can be populated with data only for successful builds. It shows if Updates Builder has already merged new version to the Git repo of target distribution by itself. Such completely automated merge is performed only for packages from Contrib repository. For packages from the Main repo maintained by ROSA people, Pull Requests are sent to merge the auto_update branch (where Updates Builder performs all its tasks) to the Main one.

The Errors Recognized column tries to reflect reasons of build failures. The Updates Builder launchers analyze logs of failed builds and recognize the most common failure reasons (failed patch, missing file, etc.). Curently this analysis is quite simple and less then a dozen reasons are recognized, but in future we plan to improve this classification.

Finally, the Last Build Date column contains a date when the last attempt to update the package was performed. For every package, the table contains inly a single row corresponding to the last update attempt.

I hope that these pages will give you a general idea of what Updates Builder is working on. Note that these reports reflect only initial results of Updates Builder work. If after analysis of these results a maintainer fixes builds of updated versions of some packages and push these updates to reporitories, thee actions will not be reflected in the report.

NrjQL kernel - the "heart" of ROSA

Kernel is the core of any Linux distribution and it is the kernel that gave name to all these systems. Linux kernel contains a lot of configuration options and you can get very different kernel images from the same source code. In addition, there are a lot of patches all over the worlld that are not included to the main development tree but that can be interesting for certain groups of users. So it is no wonder that different Linux distributions even if based on the same version of source code from Linux Torvalds provide users with quite different kernels,

In ROSA, we use the kernel variations initially created by the MIB (Mandriva International Backports) group and named nrj and nrjQL. What do these names actually mean and what features stand behind them? Let's listen to Nicolò Costanza who builds kernels for ROSA Desktop distributions:

Technically speaking, with the "NRJ" configs the CPU and RCU are configured with full Preemption enabled (CPU Preemption, RCU Preempt tree) and in full Boost mode. The "QL" mode also adds the CK1 patchset including BFQ disk I/O scheduler and BFS CPU load scheduler. One should also mention the presence of UKSM for a better memory manager and TOI for better suspension and hibernate functionality, each designed with desktop system performance and responsiveness in mind. So we really try to do everything to obtain a feeling close to realtime kernel from user point of view.

What are the roots of the name?

We looked for a small nickname (2 to 4 chars) for our kernel variation. Several names were proposed and at the end the most of MIB people liked NRJ, Amongst the another proposals there was kernel-viagra, but this was rejected:) The NRJ word actually stands for ENERGY and should underly that the kernel is acting for a PC like an energetic drink do for a human being, like a RED BULL, but we could not adopt that name or another registered trademark. In the case when PC is very stressed by different tasks but a slow response is unacceptable, NRJ try to offer the missing ENERGY to your PC. With NRJ the PC is more responsible in a multitasking experience.

So here is the short description of the kernel used in ROSA.

Actually we have several variations of the kernel - Nico always builds at least vanilla, nrj-laptop and nrj-desktop editions. The process of Nico's work can be always tracked at Nico's repository in ABF. Finally, more details about history of nrjQL patches in ROSA can be found in MIB forum.

Linux Kernel ABI Tracker

The Linux kernel is constantly evolving: improved hardware support, optimized various kernel subsystems, etc. As a result, the size of the kernel and the number of its ABI/API interfaces is growing from version to version. Thus, in the course of development, some of the old interfaces are subject to change, which may break the operation of other software components using kernel interfaces: kernel modules, drivers, dynamic testing/trace tools, etc.

For regular automatic analysis of changes in Linux kernel interfaces we have developed the Kernel ABI Tracker. This tool is looking for new versions of the kernel at kernel.org, building them and analyzing API changes using a set of basic tools. For each version of the kernel the tracker creates a so-called ABI dump from its debug-information using the ABI Dumper tool. ABI dump contains information about all public interfaces exported by the kernel: their properties, parameter types and the structure of data types. And then a pair of ABI dumps for two consequent kernel versions are passed to the ABI Compliance Checker tool in order to analyze changes and create the report. This report describes changes in the kernel API interfaces (added and removed symbols, changes in data types, etc.) and divides them by the severity level for applications. In addition to its direct purpose, ABI dumps may also be used for other kinds of analysis by third-party developers and are therefore available for download.

The tracker provides reports on the results of testing defconfig-configuration of all the latest longterm, stable and mainline kernel versions. The home page shows a plot of the number of interfaces depending on the version of the kernel. The results so far obtained for the two architectures: x86 and x86_64. Support for arm architecture is planned in the near future. Also planned testing of other kernel configurations (allyesconfig, etc.).

Basic tools ABI Dumper and ABI Compliance Checker, previously developed in the ROSA laboratory to track ABI changes in C libraries, have required significant improvements to be able to analyze changes in Linux kernel. The problem was in the huge depth of data structures and a big number of kernel interfaces, so both tools required too much cpu time and RAM to operate. As a result, the tools now are faster in the analysis of large input objects.

Towards the Better Packages - Rpmlint News

Rpmlint is one of the major tools used by every ROSA maintainer to control package quality. This tool checks whether packages meet the distribution packaging policies. It is launched automatically at the end of every build performed in ABF. In addition, we constantly monitor ROSA repositories with Rpmlint andpublish results at the FBA site.

Packaging policies are not carved in stone and are subjected to changes as the distribution evolves. From time to time new ideas come to developers' minds about new useful checks that could be implemented. As a result, Rpmlint is also constantly evolving. Not long ago we performed a significant refactoring of its code and dropped some checks that are not relevant for ROSA anymore - such as invocation of ldconfig in post-scripts, definition and clean up of BuildRoot and so on. These checks have not been used for a long time, but they made the code larger and decrease the speed of the tool.

Besides code clean up, we have implemented some new interesting features in our Rpmlint.

First of all, we have implemented stricter checks for packages containing executable files or libraries with RPATH parameter. This parameter affects the order of folders where the search is performed by dynamic loader when looking for libraries during application start - the paths listed in RPATH are looked in the first order. This feature should be used with care, especially in libraries that can be used by other distribution components. In ROSA, if you need to use RPATH in your package, it is recommended to put all libraries which should be accessed using RPATH in a separate subfolder of the /usr/lib directory (it is recommended to name this subfolder in the same way as the package itself - e.g., /usr/lib/fglrx contains libraries specific to fglrx). If RPATH points to a directory which contains libraries not expected by maintainer, then application behavior can become surprising and unpredictable. A common example of such situation is the case when RPATH contains system directories such as /lib or /usr/lib. As the practice shows, adding these values to RPATH can have fatal consequences - for example, we have recently fixed a problem with Empathy which failed with segmentation fault on a system with Nvidia drivers due to RPATH set in libwebkit library (used by Empathy). From now on, Rpmlint automatically detects such situations at the end of package build and stops the build with a non-zero exit code if it detects a shared library or executable file that have RPATH containing /lib, /usr/lib, /lib64 or /usr/lib64.

Next, by request of our Russian localization team, we have added checks for untranslated names and comments in package desktop files. Localization of such files is very important since they are used to provide user with information about application that will be launched by clicking on particular icon in SimpleWelcome or RocketBar. Since these checks are used by Russian localization team only, we have not included them to the main branch of Rpmlint shipped with ROSA distributions. The checks are used only when launching tests at FBA and their results can be observed in corresponding sections of the site. For example, for the main repository of ROSA Desktop Fresh R1:

The same checks can be easily implemented for other languages if corresponding localization teams are willing to monitor and fix the whole set of desktop files.

Finally, Rpmlint can now check correctness of package suffixes. In ROSA, packages of every distribution version have their own suffix (to distinguish packages from different versions) which is automatically set by ABF when package is built. However, it sometimes happens when a package is not built in ABF but copied to repository manually from corresponding repository of a previous ROSA version. This is usually used in cases when packages have cycled build dependencies, and the easiest way to rebuild such package for a new distribution version is to use uts dependencies from the previous one. Normally such "manually added" packages are dropped from the repository as soon as they are no longer needed. However, it sometimes happens that maintainers forget to drop the old package so it remains in the repository like a garbage. It would be nice to automatically detect packages with wrong suffixes in repositories, and no this possibility is implemented in Rpmlint by means of non-standard-distsuffix check. The list of allowed suffixes is defined in Rpmlint settings, in the ValidDistSuffixes parameter.

WiFi and Broadcom - Handling the Errors

Two bugs related to error handling have been fixed by our developers in the proprietary driver for Broadcom WiFi adapters (broadcom-wl, a.k.a. broadcom-sta, a.k.a. wl). Both problems (#2146, #2667) lead to kernel crashes at boot time on the laptops of several our users.

By the way, not all major Linux distributions have these problems fixed at the time of writing.

LinuxCon Europe 2013 - About Data Races in the Linux Kernel

October 22, 2013 - Eugene Shatokhin, one of our developers, gave a talk devoted to data races in the Linux kernel modules at LinuxCon Europe (slides, notes for the slides).

Such errors could be very hard to find and their consequences may vary from negligible to critical. Hunting the races down is especially important for the Linux kernel, where, for example, the code of the drivers can be executed by many threads at the same time. Now add interrupts and other asynchronous events and remember that the synchronization rules for the data are not always fully described (if described at all)...

Most of the talk was about the tools that can detect data races in the Linux kernel. KernelStrider and RaceHound tools, Eugene was one of the main developers of, were covered in more detail.

KernelStrider collects data about the operation of the kernel component (e.g. a driver) under analysis in runtime. The information about memory accesses, allocations and deallocations, locks and unlocks, etc., is then analyzed in the use space by ThreadSanitizer (Google). The algorithm of searching for races is briefly described here.

KernelStrider may issue false alarms in some cases. For example, false alarms happen when a network driver turns off interrupts in hardware and then accesses some common data without the risk of conflicts with the interrupt handlers.

RaceHound tool allows to check the warnings about data races issued by KernelStrider and find real races among them. RaceHound works as follows.

- A software breakpoint is placed on an instruction in the binary code of the driver that may be involved in a data race.

- When the breakpoint triggers, RaceHound determines the address of the memory area which is about to be accessed by the instruction. Then it places a hardware breakpoint to track the accesses of the needed kinds (writes only or both reads and writes) to that memory area.

- A small delay is made before the execution of the instruction.

- If some other thread accesses that memory area during the delay, the hardware breakpoint will trigger and RaceHound will report a race.

That is, KernelStrider plays the part of a "detective" or an "analyst" here and narrows the range of "suspects" - the fragments of the code possibly involved in data races. RaceHound is then a covert monitoring system tracking these suspects. If it catches a suspect "red-handed", everything is clear, the "crime" (data race) is confirmed.

There were many questions asked both during the talk and after it. Among other things, the audience was interested in the following.

- Plans to support ARM (the mentioned tools currently work on x86 only) - may be later, not in the near future.

- Situations when KernelStrider misses data races - yes, this is possible in some cases, mostly due to how ThreadSanitizer works as well as due to sometimes inaccurate event ordering rules used.

- Support for suspend/resume in KernelStrider - yes, KernelStrider operates during suspend and resume too.

- Support for analysis of the kernel proper rather than the modules in KernelStrider and RaceHound - not implemented at the moment.

- Instrumentation of the code to be analyzed during the compilation rather than during its loading as KernelStrider does now - may be beneficial, it is actually one of the future directions of the development.

- and so on.

The developers from Intel actively participated in the discussion of the races found by the tools mentioned above. It is no surprise because these races were found in the network driver e1000, created by Intel. A strange thing became obvious during that discussion: it is a common practice in the network drivers not to use synchronization in some cases even if a race may happen as a result (and the races were actually found there). This is the case, for example, for NAPI and some of the functions involved in data transmission. This is probably to avoid performance losses due to locking but the estimates of such losses as well as the guidelines how to avoid problems there are yet to be found.

It seems that many kernel developers share the following attitude to the data races:

- Have you observed any particular problems due to that race? Has anything crashed or otherwise worked wrong?

- Not yet.

- Oh, well.

And nothing happens then.

Reasonable? Perhaps, but if one remembers this article, for example, the reason becomes less certain.

More GRUB2 upgrades and bugfixes

Out developers continue upgrading the GRUB2 bootloader.

There were 14 patches made and token into the upstream.